Case Study

Moonpig Increases Conversions by Optimizing Product Recommendations

About Moonpig

Moonpig is a UK-based retailer specializing in unique and personalized cards and gifts. With over 10,000 card designs and a large selection of gifts, such as food, drink, flowers, and personalized mugs, continually optimizing the digital experience is crucial to surfacing the right products to the right customer at the right time. A/B testing and personalization are thus an integral part of Moonpig’s brand strategy.

Moonpig is strongly development-driven and invests heavily in internal machine learning and product recommendation capabilities. An optimization tool that both integrates with their existing ecosystem and allows them to test all of the site's features is therefore very important to them.

Moonpig previously used a client-side A/B testing solution, but found it difficult to integrate and ran into problems with bucketing, data accuracy, and site performance.

They selected SiteSpect because of its server-side capabilities, which allow them to test all of their site features, including algorithms and product recommendations. Further, Moonpig uses SiteSpect’s Engine API implementation, meaning their traffic does not flow directly through SiteSpect.

James Huppler, Head of Product at Moonpig, explains, “Using SiteSpect’s Engine API is really valuable for us. It allows us to group and cohort users accurately, our engineers find it straightforward to implement tests, and we don’t have any of the flicker we had with our client-side tool.”

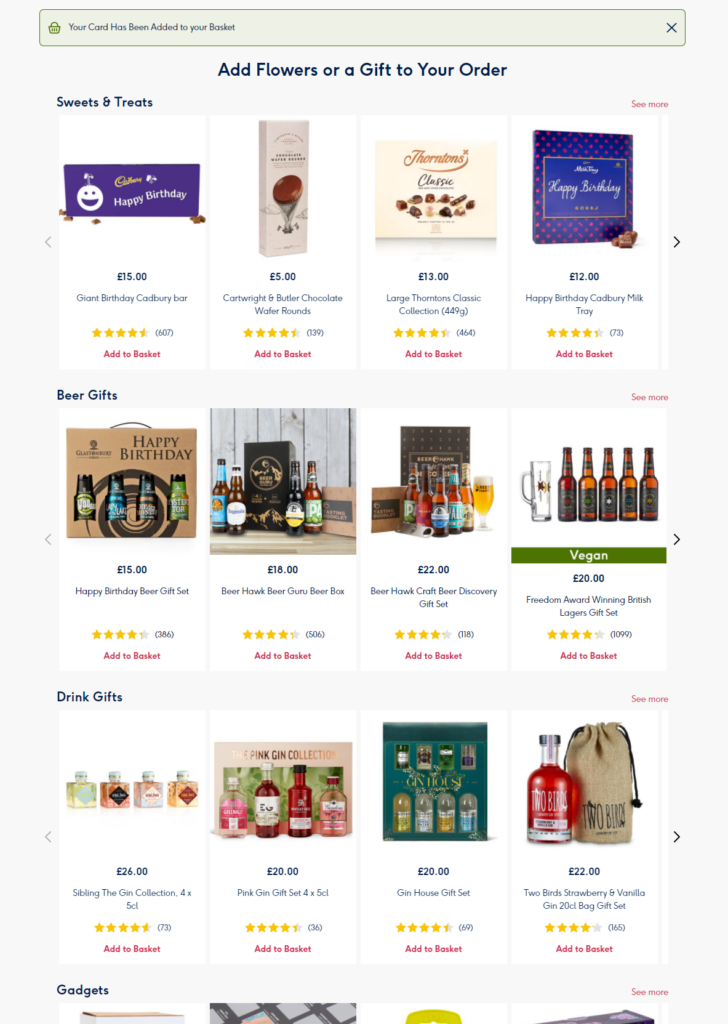

Fine-Tuning Product Recommendations Display

This test focused on one instance of product recommendations in the customer journey. When a customer adds a personalized card to their cart, they are directed to a gifts page that displays related products. The team responsible for “attach” metrics (the measure of users who attach one of these recommended products to the card in their cart) hypothesized that optimizing the order and presentation of these product recommendations would improve attach rate.

The hypothesis for this test grew out of a set of customer interviews in which users said that, while the existing recommendations were relevant to the selected card, they weren’t ordered or categorized in a way that made products easy to browse.

To improve the experience, Moonpig designed a new carousel format with items grouped by category and displayed in horizontal groups.

To achieve this, they updated their recommendations algorithm and added new metadata so that the products could be effectively grouped. Items and categories that were closer matches for the customer and selected card were prioritized in the display.
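As a rough illustration of the grouping and prioritization described above, the following is a minimal Python sketch, not Moonpig’s actual algorithm. The `Recommendation` type, the `category` metadata field, the `relevance` score, and the rule of ordering categories by their best-scoring item are all assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    product_id: str
    category: str     # hypothetical metadata field used for grouping
    relevance: float  # hypothetical match score for this customer and card


def build_carousels(recs):
    """Group recommendations by category, ordering rows and items by relevance."""
    by_category = defaultdict(list)
    for rec in recs:
        by_category[rec.category].append(rec)

    # Within each category, show the closest matches first.
    for items in by_category.values():
        items.sort(key=lambda r: r.relevance, reverse=True)

    # Assumed rule: order categories by their best item, strongest row first.
    ordered = sorted(
        by_category.items(), key=lambda kv: kv[1][0].relevance, reverse=True
    )
    return [(category, [r.product_id for r in items]) for category, items in ordered]
```

Each returned tuple corresponds to one horizontal carousel row: a category name and its products in display order.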

Results

The new carousel structure for product recommendations proved a success. The new design increased revenue per user without changing the rate of purchases. This means the test succeeded in increasing attachments without any negative impact on purchase conversions. Moonpig has since released this variation to 100% of traffic.
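To make the three metrics in this result concrete, here is a minimal Python sketch of how they might be computed for one test variation. The `UserOutcome` record and its fields are hypothetical, and the choice of denominator (every user assigned to the variation) is an assumption; the case study does not state the exact definitions Moonpig uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserOutcome:
    purchased: bool      # user completed an order
    attached_gift: bool  # user added a recommended product to the card
    revenue: float       # order value, 0.0 if there was no purchase


def summarize(outcomes):
    """Return per-user metrics for one variation (assumed definitions)."""
    n = len(outcomes)
    if n == 0:
        raise ValueError("no users in this variation")
    return {
        "purchase_rate": sum(o.purchased for o in outcomes) / n,
        "attach_rate": sum(o.attached_gift for o in outcomes) / n,
        "revenue_per_user": sum(o.revenue for o in outcomes) / n,
    }
```

Under these definitions, a variation can raise `attach_rate` and `revenue_per_user` while leaving `purchase_rate` unchanged, which is the pattern the test reported.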