Why Your Experimentation Program May Need a Statistics Refresher

By Merritt Aho

November 5, 2019


The digital world needs more experimentation. But it also needs better experimentation. A/B testing without strong measurement discipline is a lot like the food pictures people post on Instagram: impressions of a reality that doesn’t exist. This happens a lot, often within experienced teams, for several reasons.

At the start, it helps to acknowledge that we, the experimenters, are not unbiased observers of data. Yes, we love data. Yes, we glory in data-driven decision making. But we also love to win, to find the gold nuggets that will make our companies successful, our egos pleasantly plump, and our resumes impressively decorated with numbers. But this preference, this bias, however slight, compromises our judgment and interpretation of data from the get-go.

Compound this with the limitless ways we can misrepresent and abuse statistics, and what emerges resembles data-driven decision making but is in reality little more than a tangle of assertions that are extremely difficult to dispute without full visibility into the data. In short, we can make powerful arguments that suit our agenda, but peel back enough layers of the onion and you may find an empty core.

It’s probably a secondary reason, but our intuitions around probability and significance can also sit far off the mark. Combined with those small biases, they lead to decision making that is data-influenced but still driven by intuition. Thus, statistics.

An Example of Statistics vs. Intuition

I love to reflect on the so-called “Monty Hall” problem made famous by the old game show Let’s Make a Deal.

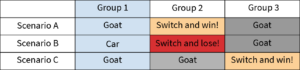

Most of us will reason that there’s no advantage to switching, that it’s a 50/50 chance of winning either way. But here we’d be dead wrong. Switching gives you a 2-in-3 (about 67%) chance of winning, while staying gives you only a 1-in-3 (about 33%) chance. A quick illustration will help you “see” the fallacy.

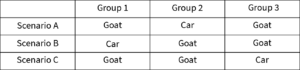

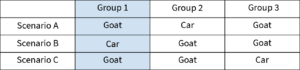

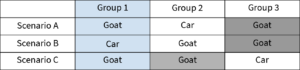

You start with 3 possible scenarios:

Now, in the first step, you choose Door 1.

In step 2, the host reveals a goat behind one of the other doors.

Now, in how many of the 3 scenarios would switching get you a car? In 2 of the 3: switching loses only in the one scenario where the car was behind Door 1 all along.
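If the picture still doesn’t convince your gut, a simulation might. Here is a minimal Python sketch of the game as described above; the function names, the number of rounds, and the fixed random seed are my own illustrative choices, not anything from the show.

```python
import random


def play_round(switch: bool, rng: random.Random) -> bool:
    """Play one round and return True if the contestant ends up with the car."""
    doors = [0, 1, 2]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # The host opens a door that is neither the contestant's pick nor the car.
    opened = rng.choice([d for d in doors if d != pick and d != car])
    if switch:
        # Move to the one door that is still closed and was not picked.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == car


def win_rate(switch: bool, rounds: int = 100_000, seed: int = 0) -> float:
    """Share of rounds won under a fixed strategy (always switch or always stay)."""
    rng = random.Random(seed)
    wins = sum(play_round(switch, rng) for _ in range(rounds))
    return wins / rounds


if __name__ == "__main__":
    print(f"stay:   {win_rate(switch=False):.3f}")
    print(f"switch: {win_rate(switch=True):.3f}")
```

Over many rounds, staying should win roughly one time in three and switching roughly two times in three, which matches the count from the scenarios.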

Seen at this level, it’s a simple problem with a clear solution. But our intuitions, even when scrutinized, have a really hard time picking up on that initially. Likewise, when we look at data comparing 2 groups and observe a difference between them, it’s hard not to immediately judge one as being superior to the other even while scenarios exist in which what you’re observing is completely random or your data set does not reflect the true difference in the groups. Thus, statistics.
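To get a feel for how often randomness alone can produce a “winner,” here is a rough sketch that simulates an A/A test: two groups with exactly the same true conversion rate. Every number in it (1,000 visitors per group, a 5% conversion rate, a 10% lift threshold) is a hypothetical picked for illustration, and it deliberately skips any formal significance test.

```python
import random


def observed_lift(visitors: int, true_rate: float, rng: random.Random) -> float:
    """Relative lift of B over A when both groups share the same true rate."""
    conv_a = sum(rng.random() < true_rate for _ in range(visitors))
    conv_b = sum(rng.random() < true_rate for _ in range(visitors))
    if conv_a == 0:
        # Avoid dividing by zero in the (very unlikely) case of no conversions.
        return 0.0
    return (conv_b - conv_a) / conv_a


def share_of_false_lifts(
    trials: int = 500,
    visitors: int = 1_000,
    true_rate: float = 0.05,
    min_lift: float = 0.10,
    seed: int = 0,
) -> float:
    """Fraction of A/A tests whose observed lift is at least min_lift either way."""
    rng = random.Random(seed)
    hits = sum(
        abs(observed_lift(visitors, true_rate, rng)) >= min_lift
        for _ in range(trials)
    )
    return hits / trials


if __name__ == "__main__":
    share = share_of_false_lifts()
    print(f"A/A tests showing a 10%+ 'lift' or 'drop': {share:.0%}")
```

With samples this small, a large share of runs shows a double-digit difference even though the groups are identical by construction. Raising `visitors` shrinks that share, which is the intuition behind waiting for an adequate sample size.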

The Place for Experimentation

So what of our intuitions? Should we simply ignore them? Should we feel so uncertain about our judgment that we do nothing without A/B testing? Should we discount years of experience doing UX, design, user research, analytics, ecommerce, or other digital marketing functions? What is experience worth anyway?

I’m of 2 minds here. On the one hand, our intuitions and judgments should become better over time as we run our own experiments and witness the results of many others’ experiments. This should lead to better, more impactful ideas making their way into our pipeline of hypotheses.

On the other hand, we operate in an environment and era of rapid change. The competition changes. Our customers’ needs and expectations change. Design sensibilities change. Technology changes. Economies change. And all of these changes threaten to undermine the ongoing advantage of any given winning treatment and our refined judgment along with it. A related effect is the phenomenon known as regression to the mean, where an unusually strong test result tends to be followed by performance closer to the average, so the gain appears to shrink over time. Such unexpected and unwelcome trends have been perplexing A/B testers for years. It seems that even after running a controlled experiment, results are not guaranteed, and we should expect the benefit to lead a short but joyous life. The uncertainty produced is akin to that described brilliantly in Dr. Seuss’ whimsical yet insightful message to youth:

“You will come to a place where the streets are not marked. Some windows are lighted. But mostly they’re darked. A place you could sprain both your elbow and chin! Do you dare to stay out? Do you dare to go in? How much can you lose? How much can you win?

And if you go in, should you turn left or right…or right-and-three-quarters? Or, maybe, not quite? Or go around back and sneak in from behind? Simple it’s not, I’m afraid you will find, for a mind-maker-upper to make up his mind.”

–Dr. Seuss, Oh, the Places You’ll Go!

Until such a time as design innovation ceases, competitors stop striving, and consumers become reliably predictable, controlled experimentation remains the best way to go about de-risking experience changes for consumers at scale.

But even though experimentation is often a highly affordable option for reducing uncertainty, most organizations have many more decisions to make than they can fund A/B tests for. Indeed, many decisions are more trivial than an A/B test can justify. There are also other ways to reduce uncertainty. Experience, your own and that of others, does count for something. Data analysis counts. Usability testing counts. Surveys count. Customer support history counts. Records from sales interactions count. Heuristics and “best practices” count. Shoot, even your mom’s opinion counts (a lot more than one might think!). All of these sources provide the kind of evidence that we should demand for even the most trivial of risks. We should also scrutinize all sources of evidence and acknowledge, even expect, that they will produce errors at some cadence.

When the chance of being wrong multiplied by the cost of being wrong is greater than your tolerance for loss, it’s time to either abandon ship or gather more evidence. And while A/B testing is not the panacea of de-risking business decisions, it might be the closest we can get.
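That rule of thumb is simple enough to write down. The sketch below is only one way to express it; the function names and the dollar figures in the example are hypothetical, and in practice both the probability and the cost are estimates you would have to defend.

```python
def expected_loss(p_wrong: float, cost_if_wrong: float) -> float:
    """Chance of being wrong multiplied by the cost of being wrong."""
    if not 0.0 <= p_wrong <= 1.0:
        raise ValueError("p_wrong must be a probability between 0 and 1")
    return p_wrong * cost_if_wrong


def needs_more_evidence(
    p_wrong: float, cost_if_wrong: float, loss_tolerance: float
) -> bool:
    """True when the expected loss exceeds what the business will tolerate."""
    return expected_loss(p_wrong, cost_if_wrong) > loss_tolerance


if __name__ == "__main__":
    # Hypothetical redesign: 30% chance it hurts, $50,000 cost if it does,
    # and a $10,000 tolerance for loss.
    print(needs_more_evidence(0.30, 50_000, 10_000))
    # Hypothetical copy tweak: 5% chance it hurts, same cost and tolerance.
    print(needs_more_evidence(0.05, 50_000, 10_000))
```

In the first case the expected loss is well above the tolerance, so it is worth testing (or walking away); in the second it sits below the tolerance, so shipping on judgment alone may be reasonable.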

Merritt Aho is an Optimization Director at Search Discover. Learn more about him and his work here.

