An Interview with Journey Further: Is A/B Testing Impacting Your SEO?

By SiteSpect Marketing

May 25, 2022

Journey Further is a performance marketing agency delivering clarity at speed. They specialize in paid media, organic search and conversion optimization. Conversion Director, Jonny Longden, Conversion Operations Manager, Jon Crowder and SEO Technology Director, Steve Walker discuss the impact of client-side A/B testing tools on SEO.

Jonny: We’re increasingly being asked by our clients whether client-side A/B testing tools are affecting Cumulative Layout Shift (CLS) and therefore having a negative impact on SEO.

No idea what I’m talking about? We’ve dug into this topic, enlisting help from Jon Crowder and Steve Walker here at Journey Further, to get to the bottom of it.

What are Core Web Vitals and CLS?

Steve: Page speed and user experience have been a big focus for Google for the past few years, with multiple attempts to incentivize webmasters and digital marketers to speed up their sites.

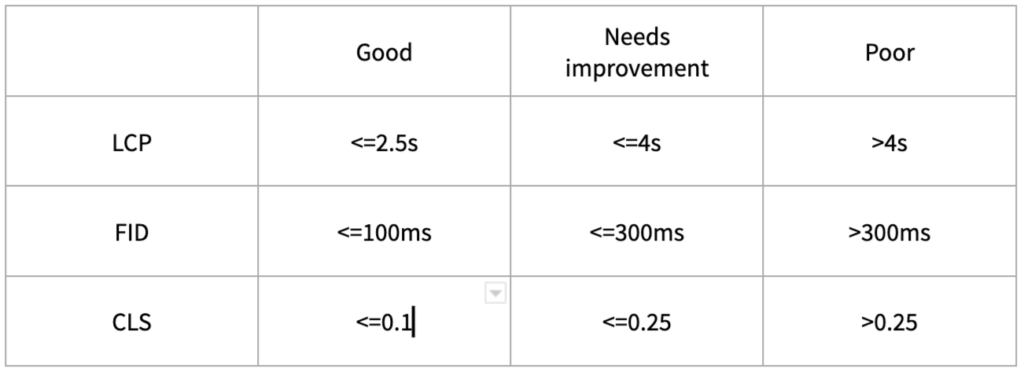

The most recent iteration of this is ‘Core Web Vitals’ (CWV) – a set of metrics aimed at improving the user experience across the web, focusing on three main areas: loading performance, interactivity and visual stability. If the website passes the three metrics listed below, it receives a ranking boost:

Largest Contentful Paint (LCP) – This metric measures loading performance and reports the render time of the largest image or text block visible within the viewport. Google states that to provide a good user experience, LCP should occur within 2.5 seconds of when the page first starts loading.

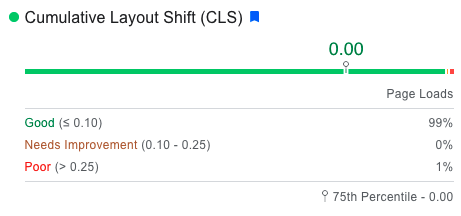

Cumulative Layout Shift (CLS) – This metric measures the visual stability of the page. For example, layout shift can be caused by elements resizing or being added during the loading process. Layout shift is calculated based on the size of the element and the distance it has moved, and is only counted if the shift isn’t due to user interaction. Google’s benchmark for this is to maintain a CLS of 0.1 or less.

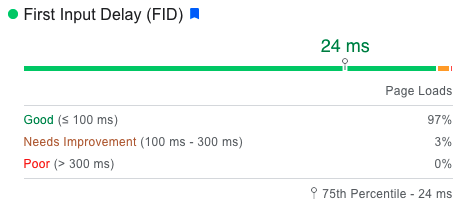

First Input Delay (FID) – This metric measures the time from when a user first interacts with a page (e.g., when they click a link) to the time the browser is able to process and react to that interaction. According to Google, pages should have an FID of 100 milliseconds or less.
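To make the CLS description above concrete, here is a minimal sketch of how a single layout shift is scored. It assumes the definition in Google's public documentation at the time of writing, where the score is the impact fraction multiplied by the distance fraction. The function name and the example numbers are illustrative only.

```python
def layout_shift_score(impact_fraction: float, distance_fraction: float) -> float:
    """Score for one layout shift: impact fraction times distance fraction."""
    return impact_fraction * distance_fraction


# Illustrative case: an element fills the top half of the viewport and is then
# pushed down by a quarter of the viewport height.
# Impact fraction: share of the viewport the element covers before and after
# the shift combined (0.50 original + 0.25 newly covered = 0.75).
# Distance fraction: distance moved relative to the viewport's largest
# dimension (assumed here to be its height), so 0.25.
score = layout_shift_score(0.75, 0.25)

print(score)         # 0.1875
print(score <= 0.1)  # False: this single shift already exceeds the 0.1 benchmark
```

CLS is built up from individual shifts like this one, which is why one large element moving a short distance can be enough to miss the benchmark.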

Page speed has been important to SEO for a while now, but with clear messaging from Google on its importance, a simplified set of metrics and increased availability of data (Core Web Vitals reports in Google Search Console), it has become a bigger priority. That is why other disciplines (like CRO) need to be conscious of it.

How is it measured?

Steve: Google collects real user experience data from the Chrome browser as part of the Chrome User Experience Report program (CrUX). This data is collected at a URL level and is the basis of all CWV measurements. Each metric has a set of benchmarks; the ‘Good’ thresholds are the ones quoted above (LCP within 2.5 seconds, FID of 100 milliseconds or less, CLS of 0.1 or less).

Google considers a page passing Core Web Vitals if it meets the ‘Good’ thresholds for 75% of visiting users.
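As a rough illustration of the 75% rule, the sketch below checks whether at least 75% of visits meet the ‘Good’ threshold for each metric. The thresholds are the ones quoted in this article. The function names, the data layout and the sample values are hypothetical, and this is not how Google itself processes CrUX data.

```python
# 'Good' thresholds as quoted in this article.
GOOD_THRESHOLDS = {"lcp_seconds": 2.5, "fid_ms": 100, "cls": 0.1}
REQUIRED_SHARE = 0.75  # share of visits that must meet each threshold


def share_good(samples, threshold):
    """Fraction of visits whose value is at or below the threshold."""
    good = sum(1 for value in samples if value <= threshold)
    return good / len(samples)


def passes_core_web_vitals(visits):
    """visits maps a metric name to a list of per-visit values."""
    return all(
        share_good(visits[metric], threshold) >= REQUIRED_SHARE
        for metric, threshold in GOOD_THRESHOLDS.items()
    )


# Hypothetical sample: three of four visits are 'Good' on every metric.
visits = {
    "lcp_seconds": [1.8, 2.1, 2.4, 3.9],
    "fid_ms": [20, 45, 80, 250],
    "cls": [0.02, 0.05, 0.08, 0.31],
}
print(passes_core_web_vitals(visits))  # True

# If a second visit has poor CLS, only half the visits are 'Good' on CLS.
visits["cls"] = [0.02, 0.05, 0.15, 0.31]
print(passes_core_web_vitals(visits))  # False
```

A page has to clear the bar on all three metrics, so a regression in CLS alone is enough to fail.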

How and why might client-side A/B testing affect this?

Jon: Client-side testing, by its very nature, is a trade-off: you accept a small performance cost in exchange for less engineering complexity. The benefits are that tests become simpler and faster to build and run, which facilitates rapid experimentation and, in turn, business growth. The other side of that coin is moderately worse page performance. It’s like using any web tool, even analytics. Having an analytics tool on your site will make it ever-so-slightly slower, but the benefits you gain from understanding how that web page performs significantly outweigh any minor loss in Core Web Vitals.

To understand how and why client-side testing tools behave this way, we must understand how websites work. I’m going to give a very simplistic version here as the modern web can be much more complex than this, however, by explaining the core principles we can understand both how and why client-side testing tools work as they do.

When you view a website, you’re looking at an interpretation of code. You input a URL into your browser’s bar, and that web address is a friendly shorthand that tells your browser which address your desired webpage lives at. Your request goes knocking on the door of a hosting provider, and then servers at that hosting provider answer back with a file containing lots of code.

Your browser then interprets that code and shows you the result as it understands it. Everything you see on the page, whether it’s basic text, images or the structure of the site, is delivered as code and then interpreted by the browser for display.

The way client-side A/B testing tools work is that we ask our tool to make a change on the page for us. Let’s say we’re changing a red button into a blue button. The code for the original underlying page is requested from the server, and it must be fully received before the change can take place.

The tool will run a series of logic checks, usually something along the lines of “is this the right web page? If yes, then run this change.”

Then it will search within the delivered code of the page to find the button color. It might find in the CSS of the underlying page that

.mainbutton {background-color: red;}

When it finds that rule, it changes it to:

.mainbutton {background-color: blue;}

This happens very quickly, within fractions of a second. A lot of the time it’s invisible to the human eye. However, there are some circumstances where the change is visible even though it is quick. We call this flicker, and it can, by itself, impact the outcome of an experiment.
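The sequence described above (check the page, find the rule, swap the value) can be sketched as follows. This is a simplified simulation in Python, not the browser JavaScript a real client-side tool would run. The targeting rule, the `example.com` URL and the function names are assumptions made for illustration.

```python
import re


def is_target_page(url: str) -> bool:
    # Hypothetical logic check: only the home page is part of the experiment.
    return url.rstrip("/") == "https://example.com"


def apply_variation(url: str, css: str) -> str:
    """Return the CSS with the button colour changed, if the page is targeted."""
    if not is_target_page(url):
        return css  # wrong page: leave the original code untouched
    # Find the .mainbutton rule and swap red for blue, keeping the rest as-is.
    pattern = r"(\.mainbutton\s*\{\s*background-color:\s*)red(\s*;)"
    return re.sub(pattern, r"\1blue\2", css)


original_css = ".mainbutton {background-color: red;}"

print(apply_variation("https://example.com/", original_css))
# .mainbutton {background-color: blue;}

print(apply_variation("https://example.com/checkout", original_css))
# .mainbutton {background-color: red;}
```

The point to notice is the ordering: the original code has to arrive first, and only then can the change be applied on top of it.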

Let’s say you’re loading in a large, heavy hero image, or some ultra-hi-res product photography. We want to replace that with equally heavy imagery, but of a different product. It’s highly likely that you’d experience flicker unless something was done to manage that change. To mitigate this, most client-side A/B testing tools hide a section of the web page, sometimes even the whole page body, until the change is made. The user’s perception is that the page loaded normally and that no elements flickered.

The upsides to this are clear – better quality experiments, higher quality data. The downsides are mostly in relation to page performance. Loading two heavy images is slower than loading one heavy image. Delaying loading until other processes are ready may cause the perception of slower loading, even though the reality is that the parts were delivered and just not ready to be viewed yet. Another downside is that this process can cause layout shift each time you experiment. In an ideal world, search algorithms that monitor Core Web Vitals would be sophisticated enough to understand that an experiment is being run on the website, and that it’s not simply poor design. In practice, however, even Google’s own client-side experimentation tool (Google Optimize) doesn’t avoid this issue.

Client-side experimentation tools are exceptional for their ease of use, their simple implementation and their flexibility, and many vendors’ code snippets are highly optimized for performance already. That said, there is and will always be an impact on Core Web Vitals when we experiment this way. It’s how the tool works.

How can we tell what the impact is?

Jon: Impact on Core Web Vitals may be measured in several ways. However, we shouldn’t accept that the best version of the website is always only the fastest version of that website. Conversion rate optimization unlocks millions of pounds of incremental revenue for the brands we work with because they’re able to be dynamic, and they’re able to move quickly and respond to their customers’ needs online. That’s a result you’d struggle to match with website performance improvements alone.

It is possible to run web vitals reports on your website before and after implementing a tool and see the real effects on those performance metrics. When we’ve done that, we’ve seen the impact to be present but minimal.
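One simple way to frame such a before-and-after comparison is to look at the 75th percentile of a metric in each period. The sketch below does this for CLS using the nearest-rank method. The sample values are invented purely for illustration and are not measurements from any real site or tool.

```python
import math


def p75(samples):
    """75th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank of the percentile
    return ordered[rank - 1]


# Hypothetical per-visit CLS values before and after adding a testing tool.
cls_before = [0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08]
cls_after = [0.02, 0.03, 0.04, 0.05, 0.07, 0.08, 0.09, 0.12]

before = p75(cls_before)
after = p75(cls_after)

print(before, after)            # 0.06 0.08
print(round(after - before, 3)) # 0.02
print(after <= 0.1)             # True: still within the 'Good' benchmark
```

In this made-up case the metric gets slightly worse but stays under the 0.1 benchmark, which is the kind of "present but minimal" outcome to look for in your own reports.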

You should also consider the impact of having, versus not having, the ability to rapidly A/B test ideas online and understand whether they perform for you and for your customers. It gives you the ability to never release an underperforming change again. That’s powerful.

What’s the verdict?

Jon: You must weigh up what matters to you. I think that 99 times out of 100 you’re going to see much more benefit from having an experimentation tool that’s flexible and simple, and an experimentation program that’s agile and informative than you will from getting your website to load fractions of a second faster. I think you’re going to see more conversion from being able to research and develop your product online. That said, there are other solutions to allow you to have the best of both worlds.

What’s the solution?

Jon: Not every experimentation tool is located within the client’s browser. Experimentation can be done entirely on the server-side. It can be integrated with your development operation and the experiments themselves would be perfectly invisible to the end-user. This is how Spotify and Apple run experimentation, for example. The downside to this approach is that it’s expensive. You must hire developers, and maintain a development backlog, and by the time you’ve done all that you are now a tech company.

The more practical middle ground is a hybrid tool. Tools like SiteSpect sit between the client browser and the server, and intercept and change the code before it’s delivered to the browser. This allows you to keep the flexibility and ease of use of a client-side tool while not passing additional processing onto the client, and the experience is delivered as if it came directly from the server. An elegant compromise.
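The general idea of changing the code before it reaches the browser can be sketched as below. This is only a conceptual illustration and not SiteSpect's actual implementation. The function names, the variant name and the sample HTML are all hypothetical.

```python
def origin_response() -> str:
    # Stand-in for the HTML that the origin server would return.
    return (
        "<style>.mainbutton {background-color: red;}</style>"
        '<button class="mainbutton">Buy</button>'
    )


def proxy_response(variant: str) -> str:
    """Fetch the origin HTML and rewrite it before it is sent to the browser."""
    html = origin_response()
    if variant == "blue_button":
        # The change is applied in transit, so the browser only ever
        # receives the finished variation.
        html = html.replace("background-color: red;", "background-color: blue;")
    return html


print(proxy_response("control"))      # original HTML, red button
print(proxy_response("blue_button"))  # rewritten HTML, blue button
```

Because the browser receives the blue button from the start, there is no original version to hide or swap out on the client, which is where flicker and layout shift would otherwise come from.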

For information on how SiteSpect works with SEO, visit our How SiteSpect Works page.

Want to see SiteSpect in action? Schedule a personalized demo!
